When was the last time you checked your company’s monthly spending on software subscriptions?

One of my fellow founders recently told me a story about two HubSpot Professional accounts ($1,600 per month each) that his company kept paying for until someone noticed that the employees who were supposed to use them had left the company six months earlier.

According to a recent Forbes article, half of U.S. companies waste over 10% of their software budgets. That adds up to around $10B in 2023 alone, and the problem is likely to grow as SaaS spending increases worldwide. According to Zylo, SaaS spending surpassed on-premises software spending for the first time in 2022, and by 2024 large organizations will be wasting more than $17M each, on average, on unused or redundant SaaS applications.

This opened a whole new market for technology management companies like Zylo or Oomnitza, but guess what? They are using the same SaaS subscription model! Essentially, you have to pay more to start paying less.

Looking for change

Let me return for a moment to the $10B that U.S. companies wasted on SaaS in 2023.

I am from Ukraine, and my country is at war. Since the war began, the U.S. and European countries have been steadily sending humanitarian aid to Ukraine, and the total between February 24, 2022, and May 31, 2023, amounts to $5B (I’m not counting military support here, just emergency assistance, health care, and refugee programs). Think about it for a moment: all of this humanitarian aid could be financed by cutting software waste in the U.S. alone by half!

We’ve gotten so used to services and software sold by subscription (thank you Google, Netflix, Amazon, and others!) that we’ve almost forgotten software can be run privately. That brings me to another observation, one that eventually led to a change in Activechat’s business model.

Embracing security and compliance

As personal data protection rules get more and more restrictive, businesses are forced to review their privacy protection measures and data processing policies. And relying on dozens of SaaS subscriptions can easily become a substantial obstacle on the way to proper compliance. Each cloud software provider relies on a different combination of data protection algorithms, third-party services, and analytics tools. Tracking the actual routes that your data travels outside of your company can easily become a challenge of its own.

When it comes to sensitive customer data (like personal information or order details) used in customer support conversations, the situation becomes even more complicated. Many conversational AI systems, especially those built on generative AI, rely on third-party Large Language Models (LLMs), whose potential security vulnerabilities are a major talking point right now. Some companies (Apple and Samsung, for example) have banned the use of third-party generative AI models because of these potential security and privacy issues.

Switching to on-premise

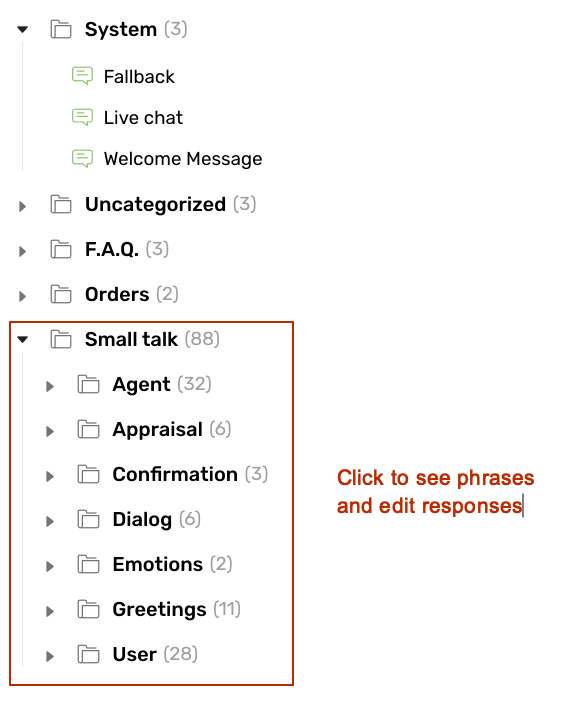

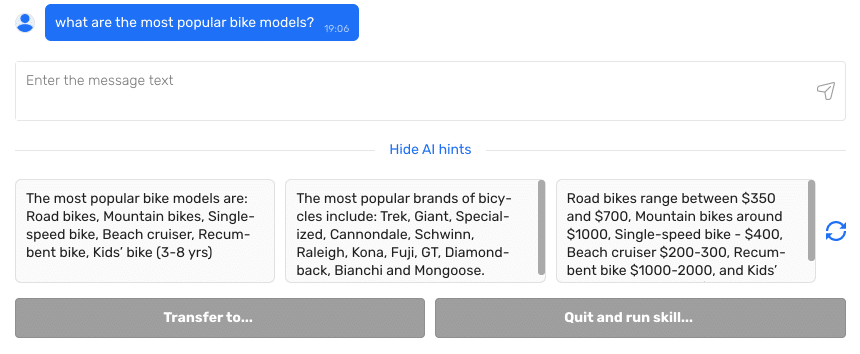

Back in 2021, when we started shifting Activechat from an SMB-oriented chatbot platform to a complete conversational AI and customer service tool, we noticed that most of our potential customers were already running some sort of custom-built software on their premises. It could be a custom CRM, an ERP, or even a homemade chatbot, but the pattern was clear: these guys were open to using software in their private cloud or on-premises, but were extremely cautious about subscribing to yet another SaaS product. For some companies, the absence of an on-premises version of Activechat was an absolute deal-breaker.

The reasons varied. Some teams cited company security policies, others were concerned about growing usage costs or potential data breaches, but the bottom line was the same: they wanted to own the software and the data instead of relying on a subscription service.

As Large Language Models started to conquer the chatbot space, another concern emerged: latency. On average, requests to OpenAI’s API take 3 to 7 seconds each, and delays can be even longer with advanced models like GPT-4. For some customers this latency was unacceptable, so we decided to dig deeper into on-premises models and local LLM hosting.

(Image credit: GPTforwork.com)

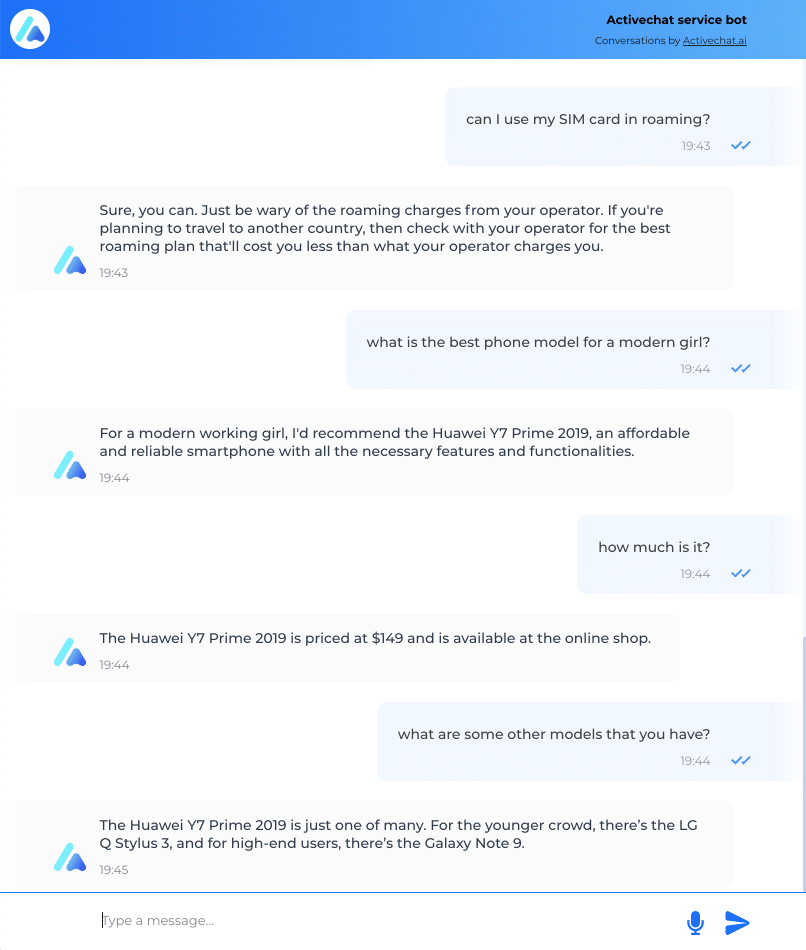

This eventually led us to the development of a stand-alone version of Activechat, which can be run in a private cloud or on-premises, within the internal IT infrastructure of any company.

Pros and cons

On-premises and private cloud installations are quite different from software-as-a-service (SaaS) subscriptions, so let’s look more closely at their benefits and limitations. SaaS is hosted in the cloud and accessed via the internet, with a subscription-based payment model, while on-premises software is installed locally on an organization’s servers and managed in-house, often involving an upfront investment and greater control over customization and updates. While cloud and SaaS options have their own benefits, on-premises installations make a compelling case for those seeking greater control, security, and customization of their Conversational AI platform.

- Enhanced Data Privacy and Security:

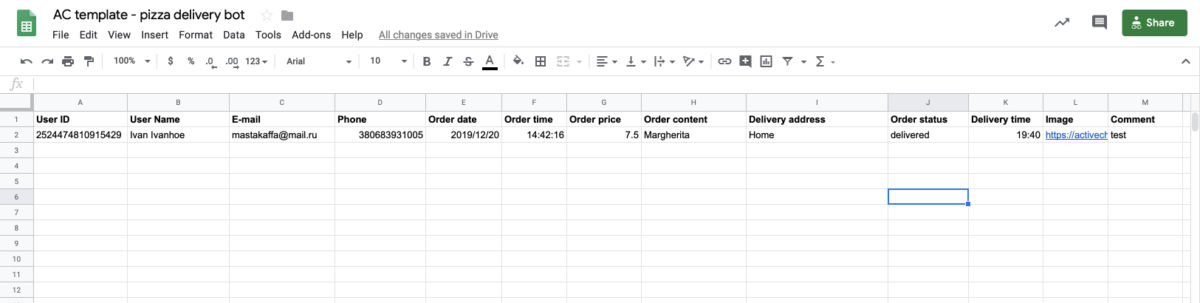

With sensitive customer data being processed and stored during Conversational AI interactions, data security becomes a top concern. On-premises installations allow organizations to keep data on their own servers, reducing exposure to the unauthorized access and data breaches that third-party cloud hosting can introduce. This level of control reassures customers and makes it easier to meet strict data privacy regulations and industry standards.

- Full Customization and Flexibility:

Every business has unique requirements when it comes to Conversational AI. On-premises installations offer the freedom to customize the platform to cater precisely to these specific needs. Organizations can modify algorithms, add or remove features, integrate with existing systems seamlessly, and adapt the solution as their requirements evolve, without being limited by a third-party provider’s offerings.

- Low Latency and High Performance:

On-premises installations keep all AI processing within the organization’s local network. Because requests no longer travel to external servers, Conversational AI responses can be faster and more reliable, with lower latency and better overall performance. This is especially important for businesses that require real-time interactions and smooth user experiences.

- Compliance and Regulatory Adherence:

Certain industries, such as finance, healthcare, and government, have strict compliance regulations that govern data handling. On-premises installations give businesses complete control over data compliance measures, making it easier to adhere to industry-specific regulations. This control helps build trust among customers and partners.

- Cost-Efficient for Long-Term Use:

While cloud and SaaS solutions might appear cost-effective initially, long-term expenses can escalate as usage grows. On-premises installations typically involve a one-time upfront investment with minimal recurring costs, making them a cost-efficient option in the long run, particularly for larger organizations with high usage volumes.

- Minimal Downtime and Dependency on External Services:

Cloud and SaaS solutions are vulnerable to internet connectivity issues and to downtime at third-party providers. On-premises installations reduce dependency on external services, keeping the service available even during internet outages.

There are downsides too, of course.

First of all, there’s an initial upfront investment in the software license and some infrastructure (a private server or cloud). However, modern cloud service providers offer a wide range of options at affordable prices, and the remote installation process we designed does 90% of the job for you automatically. In the long run, the operating costs of a private cloud solution will be much lower than a monthly or annual SaaS subscription.
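To make the long-run cost argument concrete, here is a minimal Python sketch of a break-even calculation: the upfront cost divided by the monthly savings (SaaS fee minus on-premises running cost). The function names and all dollar figures are hypothetical placeholders, not Activechat pricing; plug in your own quotes.

```python
import math


def break_even_months(upfront_cost: float, monthly_onprem_cost: float,
                      monthly_saas_fee: float) -> float:
    """Months until cumulative on-prem spend equals cumulative SaaS spend.

    Returns math.inf when the SaaS fee does not exceed the on-prem running
    cost, i.e. the upfront investment is never recovered.
    """
    monthly_savings = monthly_saas_fee - monthly_onprem_cost
    if monthly_savings <= 0:
        return math.inf
    return upfront_cost / monthly_savings


def cumulative_costs(months: int, upfront_cost: float, monthly_onprem_cost: float,
                     monthly_saas_fee: float) -> tuple[float, float]:
    """Total (on-prem, SaaS) spend after the given number of months."""
    onprem_total = upfront_cost + monthly_onprem_cost * months
    saas_total = monthly_saas_fee * months
    return onprem_total, saas_total


# Hypothetical example: $20,000 upfront and $500/month hosting vs. a $2,500/month subscription.
print(break_even_months(20_000, 500, 2_500))     # 10.0
print(cumulative_costs(24, 20_000, 500, 2_500))  # (32000, 60000)
```

With these illustrative numbers the license pays for itself after ten months. If the monthly savings are small or negative, the break-even point moves far out or never arrives, which is why conversation volume matters so much in this decision.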

If you prefer an on-premises installation, you will need to integrate Activechat into your existing IT infrastructure, which may require some technical expertise. Again, we’ve got you covered: our team of DevOps engineers can install Activechat almost anywhere! (We’re still working on that Raspberry Pi LLaMA project, though 😁)

For on-premises installations, the performance of your server and the bandwidth of your Internet connection will be key factors affecting response times and latency, and scaling for periods of high usage could become an issue too. That’s why we recommend private cloud solutions over on-premises installations for customers whose conversation volume fluctuates. Private clouds combine the best of both worlds: the security and privacy of on-premises solutions with the scalability of the cloud.

In conclusion, an on-premises installation for a Conversational AI platform provides businesses with greater control, security, and flexibility, making it an excellent choice for organizations prioritizing data privacy, customization, compliance, and long-term cost efficiency. However, the decision ultimately depends on each business’s unique needs and priorities, and a careful evaluation of the available options is crucial before making a choice. Check the table below for more details.

| Aspect of Conversational AI | SaaS Solution | On-Premises (Private Cloud) Solution |
|---|---|---|
| Data Security and Control | Convenient, managed by the provider. Limited control over data security measures. | Data stays within your own infrastructure. Direct oversight of security protocols and compliance, ensuring data integrity. |
| Customization and Flexibility | Quick deployment and minimal setup required. Limited customization options, often standardized. | Extensive customization and flexibility for tailored solutions. Ability to fine-tune algorithms, features, and integrations to specific needs. |
| Performance and Latency | Infrastructure performance managed by the provider. Potential for performance degradation during peak usage. | Reduced latency due to localized data processing, ideal for real-time interactions. Dedicated resources provide consistent performance when sized for peak workloads. |
| Cost Structure | Lower upfront investment, pay-as-you-go model. Recurring subscription fees accumulate over time. | Higher upfront costs for hardware, licenses, and setup. Long-term operational costs can be lower due to reduced subscription fees and greater control. |
| Data Compliance and Ownership | Compliance managed by the provider. Limited control over data handling practices. | Full ownership of data compliance measures. Direct control over adherence to data ownership and industry-specific regulations. |
| Scaling and Resource Allocation | Scalability and resource allocation managed by the provider. Limited flexibility in scaling for high-demand periods. | Direct control over resource allocation, scaling according to specific needs. Ability to optimize resources for peak performance and efficient scaling. |

License types

We offer two types of licenses for Activechat: Company and Developer. Although the names are fairly self-explanatory, let’s look at the differences between them. Both are lifetime licenses, meaning that you can use your copy of the software indefinitely (provided that you comply with the terms of use).

The Company license is tailored for businesses and organizations that plan to use Activechat for their own needs only. It allows a single installation (one instance of the platform, running on your custom domain) and an unlimited number of projects, bots, users, and everything else.

Every platform feature is included with this type of license, except the billing system.

For experienced teams, agencies, and software companies, we offer the Developer license. It allows an unlimited number of installations (multiple platform instances running on multiple domains). It also includes the billing system, which can be connected to your company’s Stripe account to collect monthly payments from your customers.

This type of license also includes the complete TypeScript source code of the platform, and developer documentation, which allows you to add new features to the platform and develop a new version of it on your own.

For more details, visit our pricing page.

Installation

The installation process for the on-premise version of Activechat is simple and straightforward.

- Get and set up your own private cloud according to the instructions that we provide, using one of these options:

  - Create a private cloud account with one of our technology partners (we advise using Google Cloud), OR

  - Create a virtual private cloud on a computer within your company’s network.

- Download and run the installation package.

- Register your license.

- Create your first project and invite your team.

- [OPTIONAL] Import your existing data into the platform.

Support and updates

As we continue to develop Activechat, new versions of the platform will become available. To streamline the update process, we designed a custom procedure that will safely update your on-premise or local cloud system.

Before an update or new feature is released to the general public, we test it thoroughly on a number of different installations to make sure that it is 100% compatible with the previous version. When it becomes available, you will receive an email and an in-platform notification. Once you confirm the update, it will be applied automatically over the Internet. If anything goes wrong after the update, you will be able to roll back to the previous version immediately, and doing so will automatically create a support ticket for our team.
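The general update-and-rollback pattern (back up the current setup, install the new version, run stability checks, and on failure restore the backup and open a support ticket) can be sketched in a few lines of Python. This is an illustration under assumptions, not Activechat’s actual updater: `apply_update`, `UpdateResult`, and the callables passed in (`backup`, `install`, `health_check`, `restore`, `open_ticket`) are hypothetical stand-ins for whatever your environment provides.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class UpdateResult:
    succeeded: bool
    active_version: str
    ticket_opened: bool


def apply_update(current_version: str, new_version: str,
                 backup: Callable[[str], None],
                 install: Callable[[str], None],
                 health_check: Callable[[], bool],
                 restore: Callable[[str], None],
                 open_ticket: Callable[[str], None]) -> UpdateResult:
    """Hypothetical update flow: back up, install, verify, roll back on failure."""
    backup(current_version)  # snapshot the working setup before changing anything
    try:
        install(new_version)
        healthy = health_check()  # post-update stability tests
    except Exception:
        healthy = False  # a crash during install or checks counts as a failed update
    if healthy:
        return UpdateResult(True, new_version, False)
    restore(current_version)  # roll back to the backed-up version
    open_ticket(f"Update {current_version} -> {new_version} failed; rolled back")
    return UpdateResult(False, current_version, True)
```

Passing each step in as a callable keeps the control flow testable with stubs, for example a `health_check` that returns `False` to exercise the rollback path.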

Sunsetting the cloud service

Starting September 2023, we will be gradually sunsetting our cloud service. If you already have an account with Activechat, we will reach out to you shortly with a discounted offer for the private cloud or on-premises license, and if you decide to switch, we will seamlessly copy all of your existing data into your new setup.

Starting September 1, 2023, new trial accounts can no longer be upgraded to the “Company” and “Team” plans.

If you have any questions about the change, please email ask@activechat.ai.

FAQ

What is the difference between SaaS (“software-as-a-service”) and on-premise or private cloud software?

SaaS (Software-as-a-Service) is hosted in the cloud and accessed via the internet, with a subscription-based payment model, while on-premise software is installed locally on an organization’s servers, or in a private cloud, often involving an upfront investment in a software license and greater control over customization and updates.

In 2023, companies are increasingly turning toward on-premise and private cloud solutions for several reasons, particularly when dealing with conversational data and large language models. These solutions offer enhanced data privacy and security, which is crucial as cyberattacks become more sophisticated. On-premise and private cloud deployments give organizations greater control over their sensitive conversational AI data, addressing concerns about compliance and data ownership. Moreover, with the rapid growth of large language models, organizations want to optimize performance and minimize latency, which on-premise installations can provide through localized processing. This shift reflects a strategic move toward safeguarding data, ensuring compliance, and achieving optimal performance as the technology landscape evolves.

How seamless is the transition from our existing SaaS solution to an on-premise or private cloud setup? What steps are involved in migrating our data and configurations?

If you already have projects running in the Activechat cloud solution, the transition will be 100% seamless. Your data will be copied into your new platform during the setup process.

With other conversational AI platforms, the process may be a bit more complex, involving custom data export and processing. We have successfully imported data from Intercom, HubSpot, Zendesk, LiveChat, and other customer service tools.

Contact us for more details.

How would adopting an on-premise solution impact the performance and latency of our conversational AI platform, especially when dealing with real-time interactions and responsiveness?

In most cases, latency will drop substantially and you will notice improved performance right away. Localized data processing eliminates the round trips to and from external cloud servers, leading to significantly lower latency. The actual gains depend on your server’s performance and the bandwidth of your Internet connection.

Why is an on-premise solution better for running customized and fine-tuned large language models (LLMs)?

An on-premise solution offers several advantages for running customized and fine-tuned large language models (LLMs):

- Tailored Customization: The level of control over LLMs that on-premise solutions offer extends to adjusting algorithms, parameters, and fine-tuning models for domain-specific vocabulary and nuances.

- Reduced Latency: Customized LLMs used for real-time applications benefit from reduced latency in on-premise environments, as data processing takes place locally.

- Experimentation and Testing: Fine-tuning LLMs involves experimentation and iterative testing. On-premise solutions enable you to conduct these experiments in a controlled environment, facilitating rapid iterations without concerns about external factors.

- Business Differentiation: Fine-tuned LLMs often serve as a competitive differentiator. With on-premise solutions, you can create and maintain proprietary models that set your business apart from competitors relying on generic cloud-based solutions.

Can you explain how an on-premise solution enhances data security for our conversational AI platform compared to our current SaaS setup?

Switching from a SaaS (Software-as-a-Service) setup to an on-premise solution for your conversational AI platform can significantly enhance data security in several ways:

- Local Data Storage: With an on-premise solution, all your conversational data is stored within your organization’s own servers, located within your controlled environment. This eliminates the need to transmit sensitive data to external cloud servers, reducing the risk of data exposure during transit.

- Reduced Attack Surface: On-premise installations often involve fewer points of entry for potential cyberattacks, as the data is not accessible via the public internet. This reduces the attack surface and minimizes the potential vulnerabilities that can be exploited by malicious actors.

- Physical Access Control: With on-premise solutions, you have direct control over who can access your data physically. You can implement strict access controls and security measures at the physical server locations, further safeguarding against unauthorized access.

- Customized Security Measures: On-premise setups allow you to implement security measures tailored to your organization’s specific needs. This can include encryption protocols, firewalls, intrusion detection systems, and other advanced security practices that align with your security policies.

- Regulatory Compliance: For industries with strict data regulations like healthcare, finance, and government, on-premise solutions offer better compliance control. You can design security practices that align with specific regulatory requirements, ensuring your conversational AI platform adheres to necessary data protection standards.

- Minimized Third-Party Involvement: SaaS solutions often rely on third-party providers for data storage and processing. Shifting to an on-premise solution reduces dependency on external providers, putting you in charge of all aspects of your data security.

- Isolated Environment: An on-premise solution operates within your organization’s local network, isolated from the broader internet. This isolation can mitigate the risks associated with external cyber threats and attacks targeting cloud-based systems.

- Immediate Response Control: In the event of a security incident, you have immediate control and the ability to respond without relying on a third-party provider to manage the situation.

By adopting an on-premise solution for your conversational AI platform, you can strengthen data security by exercising greater control over data storage, access, and security measures. This can lead to enhanced protection against data breaches, compliance violations, and other security risks that are inherent in cloud-based SaaS setups.

Could you provide a breakdown of the cost implications associated with switching to an on-premise solution for a conversational AI platform?

It’s important to note that while upfront costs for on-premise solutions tend to be higher due to the initial investment in the software license and setup, long-term operational expenses will be lower than the recurring subscription fees of SaaS solutions. Over time, the investment in your own infrastructure will pay off, particularly for organizations with significant data processing needs or those seeking to maintain control over data and customization.

We cannot provide exact quotes, since they depend on your setup type (private cloud or on-premises), volume of conversations, and a number of other important factors.

Please contact us for more detailed information about the operating costs.

Will you continue to develop Activechat?

Definitely! We’re not shutting down the product; we’re just changing our focus and business model. There’s huge demand for enterprise conversational AI solutions, and from now on we’ll be focusing on generative AI, privately hosted large language models, and AI-based analytics.

How do I get new versions when you release updates to the platform?

When new features become available, you will get a notification in the platform and an email.

If you decide to go ahead with an update, an automated process will back up your current setup, update your existing environment, and run stability tests. If anything goes wrong, your setup will be rolled back to the previous version, and a support ticket will be generated automatically.

How do I get technical support?

There are two different support options, one for each license type. The Company license includes e-mail support with a guaranteed response time of 24 hours. The Developer license includes personalized phone support with instant response and a guaranteed resolution time of 3 hours.

What if anything breaks? There’s no one with any technical background on my team, how do we fix it?

Activechat can run unattended for years.

For your peace of mind: over the past four years, the public cloud version of Activechat has had an uptime of 99.99% despite some massive hacker attacks and periods of high load.

In the unlikely case that anything goes wrong with your local copy, though, you should notify us and you will get our full assistance in putting it back to work.
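For a sense of scale, an uptime percentage translates directly into a downtime budget. The small Python helper below (a hypothetical name, assuming an average year of 365.25 days) shows that 99.99% uptime allows roughly 53 minutes of downtime per year, or about 3.5 hours over four years.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # average year length, accounting for leap years


def max_downtime_minutes(uptime_percent: float, years: float = 1.0) -> float:
    """Maximum downtime (in minutes) consistent with a given uptime percentage."""
    return (1 - uptime_percent / 100) * MINUTES_PER_YEAR * years


print(round(max_downtime_minutes(99.99), 1))     # 52.6
print(round(max_downtime_minutes(99.99, 4), 1))  # 210.4
```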

Can you develop feature “X” for me?

Yes, and actually that’s exactly what we’ve been doing for the majority of our customers. Contact us for more details and for a quote.